When reading papers, we may come across a training schedule like this:

We use the Adam optimizer with learning rate decay, which starts from 0.001 and is reduced to 0.0005, 0.0003, and 0.0001 after 500K, 1M and 2M global steps, respectively.

This means the learning rate changes with the global step. In this tutorial, we will show you how to implement this in TensorFlow.
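Read literally, the quoted schedule is a piecewise-constant function of the global step. Here is a minimal pure-Python sketch of it. It assumes that "after 500K steps" means the new rate applies from step 500,000 onwards, because the quote does not say which rate the boundary step itself uses. The name `paper_learning_rate` is hypothetical.

```python
from bisect import bisect_right

# Boundaries (in global steps) and the learning rate for each interval,
# taken from the quoted schedule.
# Assumption: a boundary step already uses the new rate.
BOUNDARIES = [500_000, 1_000_000, 2_000_000]
RATES = [0.001, 0.0005, 0.0003, 0.0001]


def paper_learning_rate(global_step: int) -> float:
    """Return the learning rate for a given global step (hypothetical helper)."""
    # bisect_right counts how many boundaries are <= global_step,
    # which is the index of the interval the step falls into.
    return RATES[bisect_right(BOUNDARIES, global_step)]


for step in [0, 499_999, 500_000, 1_500_000, 3_000_000]:
    print(step, paper_learning_rate(step))
```

The if/elif chain in the TensorFlow example does the same job for a shorter toy schedule. A lookup table like this one is easier to keep in sync with the numbers quoted in a paper.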

Adam optimizer in TensorFlow

We can create an Adam optimizer with:

```python
optimizer = tf.train.AdamOptimizer(lr)
```

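Here lr is the learning rate. It can be a Python float or a tensor, so it does not have to stay fixed for the whole training run.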
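The learning rate scales every parameter update Adam makes. To illustrate this, here is a single-variable, pure-Python sketch of the update rule from the original Adam paper (Kingma and Ba), using the default hyperparameters of `tf.train.AdamOptimizer` (beta1=0.9, beta2=0.999, epsilon=1e-8). It is not TensorFlow's implementation, which arranges the bias correction and epsilon slightly differently, so results may differ in the last digits. The function name `adam_step` is hypothetical.

```python
import math


def adam_step(param, grad, m, v, t, lr, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update for a single scalar parameter (illustrative sketch).

    m, v: running first/second moment estimates; t: 1-based step count.
    Returns the updated (param, m, v).
    """
    m = beta1 * m + (1 - beta1) * grad          # first moment (mean of gradients)
    v = beta2 * v + (1 - beta2) * grad * grad   # second moment (uncentered variance)
    m_hat = m / (1 - beta1 ** t)                # bias-corrected moments
    v_hat = v / (1 - beta2 ** t)
    param = param - lr * m_hat / (math.sqrt(v_hat) + eps)
    return param, m, v


# On the first step m_hat / sqrt(v_hat) is (almost exactly) sign(grad),
# so the parameter moves by about lr: halving lr halves the step.
for lr in (0.001, 0.0005):
    new_param, _, _ = adam_step(param=1.0, grad=2.0, m=0.0, v=0.0, t=1, lr=lr)
    print(lr, 1.0 - new_param)
```

This is why feeding a smaller value for lr directly shrinks the size of every subsequent update.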

How to change the learning rate by global step in TensorFlow?

To change the learning rate during training, one simple way is to feed its value through a tf.placeholder() at every training step. Here is an example:

import tensorflow as tf

import tensorflow as tf

import numpy as np

g = tf.Graph()

with g.as_default():

session_config = tf.ConfigProto(

allow_soft_placement=True,

log_device_placement=False

)

session_config.gpu_options.allow_growth = True

sess = tf.Session(config=session_config, graph=g)

with sess.as_default():

batch = tf.placeholder(shape=[None, 40], dtype=tf.float32) # input batch (time x batch x n_mel)

lr = tf.placeholder(dtype=tf.float32) # learning rate

w = tf.layers.dense(batch, 10)

loss = tf.reduce_mean(w)

# Define Training procedure

global_step = tf.Variable(0, name="global_step", trainable=False)

update_ops = tf.get_collection(tf.GraphKeys.UPDATE_OPS)

with tf.control_dependencies(update_ops):

optimizer = tf.train.AdamOptimizer(lr)

grads_and_vars = optimizer.compute_gradients(loss)

train_op = optimizer.apply_gradients(grads_and_vars, global_step=global_step)

sess.run(tf.global_variables_initializer())

# start train

rate = 0.1

data = np.random.normal(size = [3, 40])

for i in range(1000):

current_step = tf.train.global_step(sess, global_step)

if current_step<100:

rate = 0.1

elif current_step < 200:

rate = 0.05

elif current_step < 300:

rate = 0.01

else:

rate = 0.001

feed_dict = {

batch: data,

lr: rate

}

_, step, ls, lrate = sess.run([train_op, global_step, loss, optimizer._lr], feed_dict)

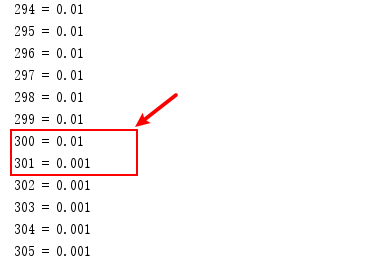

print(step, "=", lrate)

In this example, we feed a different learning rate at each step through the placeholder lr = tf.placeholder(dtype=tf.float32), which is the value tf.train.AdamOptimizer(lr) uses.

Before each training step, we use

```python
current_step = tf.train.global_step(sess, global_step)
```

to read the current global training step, and then choose the learning rate from it.

Run this code and we will see: